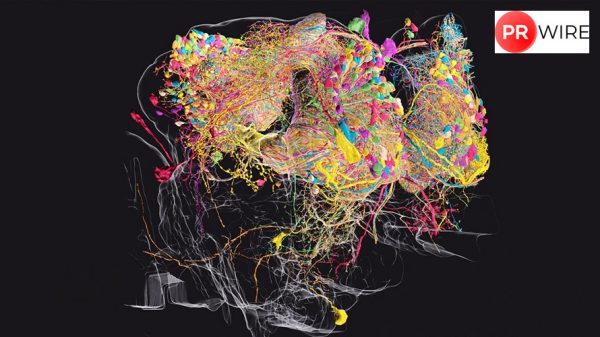

Microsoft has released its Responsible AI Transparency Report, which sheds light on the steps the company has taken to launch responsible AI platforms. The report mainly covers the actions Microsoft took in 2023 to deploy AI products safely.

In the report, the company states that it created 30 responsible AI tools in the past year.

Additionally, the report emphasizes the expansion of Microsoft’s responsible AI team and the implementation of rigorous risk assessment protocols across all stages of development for teams working on generative AI applications.

Microsoft says that it introduced Content Credentials to its image generation platforms, a feature that adds a watermark to an image, tagging it as made by an AI platform.

Microsoft has also provided Azure AI customers with enhanced tools for detecting problematic content, such as hate speech, sexual content, and self-harm, along with tools for assessing security risks.

The company is also strengthening its red-teaming efforts, which include both internal red teams that stress-test safety features in its AI models and external red-teaming applications that allow third-party evaluations before models are released.

The article originally appeared on The Hindu.