“An AI system can be technically safe yet deeply untrustworthy. This distinction matters because satisfying benchmarks is necessary but insufficient for trust.”

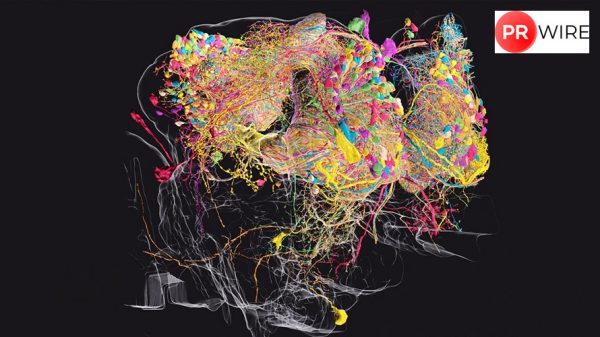

Trust and safety in artificial intelligence (AI) are fundamentally different frameworks that often produce contrasting design decisions, evaluation methods and architectural choices.

Safety focuses narrowly on preventing harm directly caused by the AI system itself, primarily through internal technical controls and security measures. It asks: Does the model produce accurate predictions? Does it resist adversarial attacks? Are data and infrastructure secure from external threats? Safety is largely an internal property, measurable through controlled testing environments and technical benchmarks.

Trust, by contrast, is a broader concept that spans fairness, explainability, privacy, robustness, governance and social impact.

A system can be technically safe yet deeply untrustworthy, and internal safety validation on its own offers little assurance to external stakeholders.

The great conceptual divide

This distinction matters because satisfying internal benchmarks and technical safety standards is necessary but insufficient for building trust. Organizations can create systems that pass all security tests, achieve high accuracy on held-out data and implement robust infrastructure, yet deploy tools that can systematically disadvantage certain populations or make decisions that users cannot understand or verify.

The same dimensions of AI design (explainability, performance, fairness, privacy, robustness, and ethics and accountability) are understood and implemented very differently depending on whether the overarching goal is safety or trust.

1. Explainability (debugging vs understanding)

In the safety paradigm, explainability is primarily an engineering tool; developers generate explanations for model predictions to debug failures, identify weak points in the decision boundary, and diagnose where the system breaks.

In the trust paradigm, by contrast, explanations serve a fundamentally different purpose: they enable end-users and domain experts to understand decisions in their own conceptual language and to verify the reasoning against their own expertise.

For example, a medical diagnosis model designed for safety might explain itself to engineers through feature importance scores and attention maps.

The same model designed for trust would explain itself to physicians in clinical language, highlighting which symptoms and test results contributed to the diagnosis in ways clinicians can validate against their medical knowledge. These are not the same explanations, because they serve different audiences and different purposes.
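The difference in audience can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the linear risk score, its weights, the feature names and the clinical phrasings are all hypothetical, invented for the example, and are not a real diagnostic model or an established explanation method. The same per-feature contributions (weight times value) are rendered once as a ranked list an engineer might use for debugging and once as a short plain-language summary.

```python
# Hypothetical linear risk model: weights and feature names are invented
# for illustration and carry no clinical meaning.
WEIGHTS = {"fever": 1.2, "wbc_high": 0.9, "cough_days": 0.1, "age_over_65": 0.4}

# Hypothetical mapping from internal feature names to clinician-facing phrases.
CLINICAL_TERMS = {
    "fever": "fever",
    "wbc_high": "elevated white cell count",
    "cough_days": "duration of cough",
    "age_over_65": "age over 65",
}


def contributions(patient: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution to the score (weight * value); missing features count as 0."""
    return {name: w * patient.get(name, 0.0) for name, w in WEIGHTS.items()}


def engineer_view(patient: dict[str, float]) -> list[tuple[str, float]]:
    """Safety-style explanation: raw contributions ranked by magnitude, for debugging."""
    return sorted(contributions(patient).items(), key=lambda kv: abs(kv[1]), reverse=True)


def clinician_view(patient: dict[str, float], top_k: int = 2) -> str:
    """Trust-style explanation: the findings that raised the score most, in clinical wording."""
    raising = [name for name, c in engineer_view(patient) if c > 0][:top_k]
    if not raising:
        return "No recorded finding increased the estimated risk."
    phrases = ", ".join(CLINICAL_TERMS[name] for name in raising)
    return f"Findings that most increased the estimated risk: {phrases}."


if __name__ == "__main__":
    patient = {"fever": 1, "wbc_high": 1, "cough_days": 3, "age_over_65": 0}
    print(engineer_view(patient))   # ranked (feature, contribution) pairs
    print(clinician_view(patient))  # one plain-language sentence
```

A genuinely trust-oriented explanation would also need clinicians to confirm that the highlighted findings make medical sense; the sketch only shows that the audience changes what the explanation has to look like.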

2. Performance and benchmarks (internal testing vs verifiable proof)

Safety treats performance as something validated internally. Does the model achieve 95% accuracy on the test set? Does it withstand adversarial attacks in a lab setting?

These are engineering questions answered through controlled benchmarking.

However, trust requires external validation and verifiable proof.

Trustworthy AI demands third-party attestation: independent auditors who certify performance and bias characteristics, ideally with access to proprietary models through privacy-preserving mechanisms such as Trusted Execution Environments.

The EU AI Act and other emerging regulatory frameworks reflect this distinction.

3. Fairness and bias (discrimination vs equity)

Safety-oriented fairness focuses on preventing the system from explicitly using protected attributes (race, gender, age) in decision-making.

This leads to designs that exclude sensitive features or implement “fairness through unawareness” — the assumption that removing demographic data will prevent discriminatory outcomes.

This approach repeatedly fails because models can learn from proxy variables that encode protected characteristics. A gender-blind credit-scoring system may still discriminate against women if the model learns that certain phone types or app choices correlate with female identity, even though gender itself is excluded from its inputs.

The algorithm’s optimization objective (predicting loan default) is indifferent to the developer’s intentions; if the training data contains historical gender bias, the model will find shortcuts that reproduce that pattern. Trust-oriented fairness addresses this by requiring continuous auditing across demographic groups, testing for both direct and indirect discrimination, and validating that performance generalizes equitably.
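A toy Python sketch shows how a gender-blind model can still produce unequal outcomes, and what a basic group-level audit looks like. Everything here is hypothetical: the records, the `phone_type` proxy and the deliberately trivial “model”, which approves a phone type if its historical repayment rate is at least 0.5 and never sees gender. The audit uses gender only to measure outcomes. The 0.8 cut-off is the widely cited “four-fifths” rule of thumb, used here as an illustrative threshold rather than a legal test.

```python
from collections import defaultdict

# Hypothetical toy records: (gender, phone_type, repaid_label).
# The labels stand in for biased history (women 2/4 repaid, men 3/4),
# and phone_type correlates with gender, so it can act as a proxy.
RECORDS = [
    ("F", "A", 0), ("F", "A", 0), ("F", "A", 1), ("F", "B", 1),
    ("M", "B", 1), ("M", "B", 1), ("M", "B", 1), ("M", "A", 0),
]


def train_gender_blind(records):
    """Fairness through unawareness: gender is dropped and only phone_type is used.
    Approve a phone type if its historical repayment rate is at least 0.5."""
    totals, repaid = defaultdict(int), defaultdict(int)
    for _gender, phone, label in records:
        totals[phone] += 1
        repaid[phone] += label
    return {phone: repaid[phone] / totals[phone] >= 0.5 for phone in totals}


def approval_rates_by_group(records, model):
    """Audit: the protected attribute is used only to measure outcomes per group."""
    counts, approved = defaultdict(int), defaultdict(int)
    for gender, phone, _label in records:
        counts[gender] += 1
        approved[gender] += int(model[phone])
    return {group: approved[group] / counts[group] for group in counts}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


if __name__ == "__main__":
    model = train_gender_blind(RECORDS)
    rates = approval_rates_by_group(RECORDS, model)
    ratio = disparate_impact_ratio(rates)
    print(model)  # {'A': False, 'B': True}
    print(rates)  # {'F': 0.25, 'M': 0.75}
    print(f"ratio={ratio:.2f}, flagged={ratio < 0.8}")  # ratio=0.33, flagged=True
```

Note that auditing requires recording the protected attribute for measurement even though the model never uses it, which is one reason fairness through unawareness and trust-oriented auditing pull in different directions.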

4. Privacy (data protection vs user control)

Safety-oriented privacy emphasizes protecting data from unauthorized access: encryption, access controls, secure storage, and preventing data breaches. The focus is on confidentiality: ensuring that no unauthorized party can access or misuse personal information.

Trust-oriented privacy centres on user agency and informed consent: Do users know what data is being collected and why? How is it used? Can they access, correct, or delete their information? Do they have a meaningful choice about what is stored?

In the trust paradigm, privacy by design means minimizing data collection, obtaining explicit consent for each use, implementing transparent data-handling practices, and respecting user rights even when doing so is technically difficult.
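Per-purpose consent, access and deletion can be sketched as a small Python class. The `ConsentLedger` name, its methods and the purpose strings are hypothetical; this is a minimal in-memory illustration under those assumptions, not a compliance implementation of any particular law, and it does not cover data minimization or transparency notices.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentLedger:
    """Hypothetical per-purpose consent store; class and method names are illustrative."""
    grants: dict[str, set[str]] = field(default_factory=dict)      # user_id -> consented purposes
    data: dict[str, dict[str, str]] = field(default_factory=dict)  # user_id -> stored fields

    def grant(self, user_id: str, purpose: str) -> None:
        self.grants.setdefault(user_id, set()).add(purpose)

    def revoke(self, user_id: str, purpose: str) -> None:
        self.grants.get(user_id, set()).discard(purpose)

    def store(self, user_id: str, fields: dict[str, str], purpose: str) -> None:
        """Collect data only for a purpose the user has explicitly agreed to."""
        self._require(user_id, purpose)
        self.data.setdefault(user_id, {}).update(fields)

    def use(self, user_id: str, purpose: str) -> dict[str, str]:
        """Every use is checked against consent for that specific purpose."""
        self._require(user_id, purpose)
        return dict(self.data.get(user_id, {}))

    def export(self, user_id: str) -> dict[str, str]:
        """Access right: return a copy of everything held about the user."""
        return dict(self.data.get(user_id, {}))

    def erase(self, user_id: str) -> None:
        """Deletion right: drop the user's data and consent records."""
        self.data.pop(user_id, None)
        self.grants.pop(user_id, None)

    def _require(self, user_id: str, purpose: str) -> None:
        if purpose not in self.grants.get(user_id, set()):
            raise PermissionError(f"no consent from {user_id} for purpose {purpose!r}")
```

For example, after `ledger.grant("u1", "diagnosis")` the ledger will store and return data for `"diagnosis"`, but a call such as `ledger.use("u1", "marketing")` raises `PermissionError`, and `ledger.erase("u1")` leaves nothing to export. The design choice is that consent is checked at every use, not only at collection.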

5. Robustness (adversarial attacks vs real-world generalization)

Safety-oriented robustness focuses on adversarial attacks. For example, a vision system robust against adversarial attacks can withstand small perturbations designed by attackers to cause misclassification.

Trust-oriented robustness asks whether the system generalizes reliably across real-world conditions and diverse populations.

A diagnostic AI system may be robustly trained against adversarial examples but fail silently when deployed in a different hospital with different patient demographics, equipment, or clinical workflows.

Recent research starkly illustrates this gap: models adversarially trained to be robust against one type of perturbation (e.g., specific image distortions) often become less robust to other, unforeseen perturbations.
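The hospital example above can be reduced to a toy Python sketch of silent failure under distribution shift. The readings, the 5.0 threshold and the assumption that site B’s equipment reads about two units lower are all invented for illustration. Nothing raises an error; the failure only shows up when results are broken down by site.

```python
# Hypothetical readings: (site, biomarker_reading, has_condition).
# Site "A" is where the threshold was tuned; site "B" uses equipment that,
# by assumption, reads about 2 units lower for comparable patients.
ROWS = [
    ("A", 6.0, True), ("A", 7.5, True), ("A", 3.0, False), ("A", 2.5, False),
    ("B", 4.0, True), ("B", 4.5, True), ("B", 1.0, False), ("B", 0.5, False),
]
THRESHOLD = 5.0  # hypothetical cut-off chosen on site A data


def predict(reading: float) -> bool:
    return reading >= THRESHOLD


def metrics(rows):
    """Accuracy and sensitivity (share of true cases detected) for a set of rows."""
    correct = sum(predict(x) == y for _site, x, y in rows)
    positives = [x for _site, x, y in rows if y]
    detected = sum(predict(x) for x in positives)
    return {
        "accuracy": correct / len(rows),
        "sensitivity": detected / len(positives) if positives else float("nan"),
    }


def metrics_by_site(rows):
    """Break the same metrics down per deployment site."""
    sites = sorted({site for site, _x, _y in rows})
    return {site: metrics([r for r in rows if r[0] == site]) for site in sites}


if __name__ == "__main__":
    print("pooled:", metrics(ROWS))
    for site, m in metrics_by_site(ROWS).items():
        print(site, m)
```

On this toy data the pooled accuracy is 0.75, which looks acceptable, while site B detects none of its positive cases. The sketch covers only the deployment-shift side of the argument, not adversarial training.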

6. Ethics and accountability (compliance vs relational integrity)

Safety frames ethics as compliance: Does the system meet ethical guidelines? Have we conducted risk assessments? Do we document bias mitigation strategies? These are important but primarily process-oriented.

Trust frames ethics as relational integrity: Is the system actually fair across all groups it affects? Do stakeholders feel their values are respected? Is responsibility clear when the system causes harm? Does governance reflect the interests of affected parties, not merely developers?

This requires continuous engagement with diverse stakeholders, monitoring real-world impacts, and adapting governance as ethical issues emerge.

An algorithm designed for hiring can claim ethical compliance by documenting bias-mitigation techniques and internal fairness audits.

It earns trust by demonstrating that hiring outcomes are equitable across demographics, that candidates understand how they are evaluated, that recruiters maintain meaningful human judgment, and that the company adjusts the system based on feedback about fairness concerns.

Designing AI for safety focuses on preventing exploitation and system failure through rigorous internal testing, adversarial robustness and threat modelling, strong security controls (access management, encryption, monitoring), disciplined technical documentation of specifications and risks, and penetration testing.

Designing for trust goes further: it aims to ensure the system reliably serves stakeholder needs in context. That requires engaging stakeholders across the full lifecycle; building transparency into the process (auditability, model provenance and training-data documentation); providing explanations suited to multiple audiences (end-users, domain experts, regulators); validating performance externally through third-party review; maintaining meaningful human oversight rather than box-ticking; monitoring real-world outcomes across diverse populations and conditions; and sustaining governance and feedback loops that adapt when evidence of ethical or performance issues emerges.

Investments required for trust

Building tools explicitly for trust often comes at a price: stakeholder engagement, external review, multi-audience explainability and continuous monitoring all take time and money. Yet these are the investments required wherever trust matters: healthcare, finance, criminal justice, hiring, lending, and any domain where AI decisions directly affect human welfare.

Current regulatory frameworks increasingly recognize this distinction.

The EU AI Act classifies certain applications as “high-risk” and subjects them to extensive documentation, transparency and human-oversight requirements, with third-party conformity assessment for some categories, precisely because safety alone is insufficient.

NIST’s AI Risk Management Framework explicitly addresses multiple dimensions of trustworthiness, not merely security or accuracy.

Standards bodies such as ISO/IEC are developing criteria for verifiable trustworthiness across fairness, explainability and robustness.

As AI becomes increasingly embedded in decision-making affecting millions of people, the distinction becomes not merely academic but foundational. Organizations that recognize and invest in the difference are more likely to build systems worthy of genuine trust.

Safety is, without doubt, a necessary foundation: no one wants to trust a system they know is insecure or unreliable. But trust is a broader social and relational property.

The article originally appeared on Hindustan Times